The need to control projects (or bodies of work that we would call a project today) extends back thousands of years. Certainly the Ancient Greeks and Romans used contracts and contractors for many public works. This meant the contractors needed to manage the work within a predefined budget and an agreed timeframe. However, what was done to control projects before the 19th century is unclear – ‘phase 0’. But from the 1800s onward there were three distinct phases in the control processes.

Phase 1 – reactive

The concept of using charts to show the intended sequence and timing of the work became firmly established in the 19th century, and the modern bar chart was in use by the start of the 20th century. One of the best examples is from a German project in 1910 (see Schürch). A few years later Henry Gantt started publishing his various charts.

From a controls perspective, these charts were static and reactive. The diagrams enabled management to see, in graphic form, how well work was progressing, and indicated when and where action would be necessary to keep the work on time. However, there is absolutely no documented evidence that any of these charts were ever used as predictive tools to determine schedule outcomes. To estimate the completion of a project, a revised chart had to be drawn based on the current knowledge of the work – a re-estimation process; however, there is no documentation to suggest even this occurred regularly. The focus seemed to be using ‘cheap labour’ to throw resources at the defined problem and get the work back onto program.

Cost management seems to have been a little different; the reports of the Royal Commissioners to the English Parliament on the management of the ‘Great Exhibition’ of 1851 clearly show the accurate prediction of cost outcomes. Their 4th report predicted a profit of ₤173,000. The 5th and final report defined the profit as ₤186,436.18s. 6d. However, this forward estimation of cost outcomes does not seem to have transitioned to predicting time outcomes, and there is no real evidence as to how the final profit was ‘estimated’. (See Crystal Palace).

Phase 2 – empirical logic

Karol Adamiecki’s Harmonygraph (1896) introduced two useful concepts to the static views used in bar charts and the various forms of Gantt chart. In a Harmonygraph, the predecessors of each activity are listed at the top, and each activity’s timing and duration are represented by vertical strips of paper pinned to a date scale. As the project changed, the strips could be re-pinned and an updated outcome assessed.

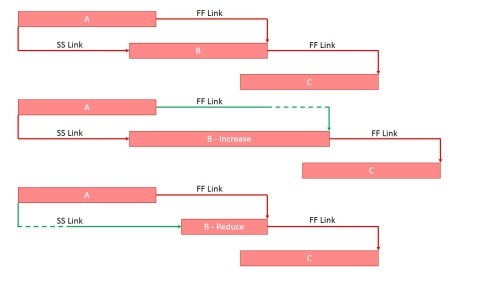

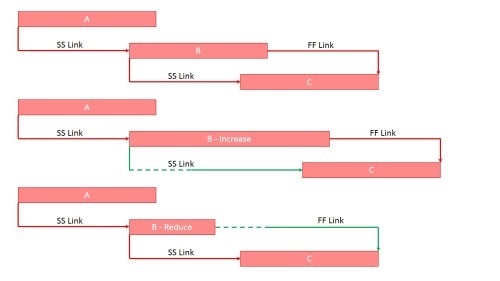

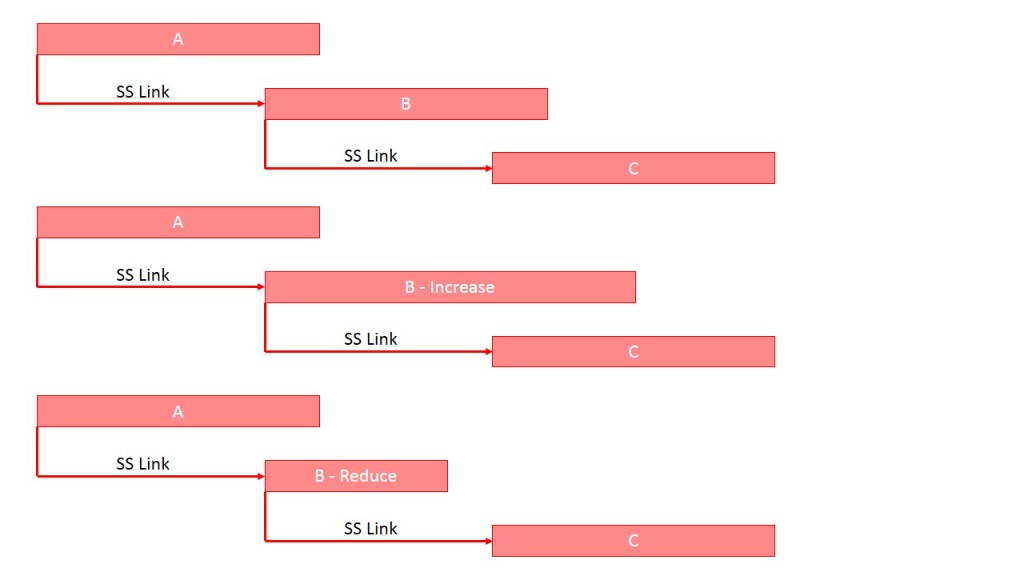

The first step towards a true predictive process to estimate schedule completion based on current performance was the development of PERT and CPM in the late 1950s. Both used a logic-based network to define the relationships between tasks, allowing the effect of the current status at ‘Time Now’ to be cascaded forward and a revised schedule completion calculated. The problem with CPM and PERT is that the remaining work is assumed to occur ‘as planned’; no consideration of actual performance is included in the standard methodology. It was necessary to undertake a complete rescheduling of the project to assess a ‘likely’ outcome.
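To make the ‘cascade forward from Time Now’ idea concrete, here is a minimal sketch of a CPM-style forward pass. The four-activity network, the durations and the function name `forward_pass` are all invented for illustration, and the sketch assumes simple finish-to-start links only. Notice that the remaining durations are taken straight from the plan, which is exactly the limitation described above.

```python
# Hypothetical network: activity -> (remaining duration in days, predecessors).
# Completed activities have a remaining duration of 0.
activities = {
    "A": (0, []),          # complete
    "B": (3, ["A"]),       # in progress, 3 days of planned work remaining
    "C": (5, ["A"]),       # not started
    "D": (4, ["B", "C"]),  # not started
}

def forward_pass(activities, time_now):
    """Return the early finish of each activity, in days from project start.

    Remaining work cannot start before time_now, and every remaining
    duration is assumed to run 'as planned' (no performance adjustment).
    """
    early_finish = {}

    def finish(name):
        if name not in early_finish:
            remaining, preds = activities[name]
            start = max([time_now] + [finish(p) for p in preds])
            early_finish[name] = start + remaining
        return early_finish[name]

    for name in activities:
        finish(name)
    return early_finish

result = forward_pass(activities, time_now=10)
project_finish = max(result.values())
```

With ‘Time Now’ at day 10 the calculated completion is day 19, whether the team has been working at half the planned rate or double it.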

Cost controls had been using a similar approach for a considerable period. Cost Variances could be included in the spreadsheets and cost reports and their aggregate effect demonstrated, but it was necessary to re-estimate future cost items to predict the likely cost outcome.

Phase 3 – predictive calculations

The first of the true predictive project controls processes was Earned Value (EV). EV was invented in the early 1960s and was formalised in the Cost/Schedule Control Systems Criteria issued by the US DoD in December 1967. EV uses predetermined performance measures and formulae to predict the cost outcome of a project based on performance to date. Unlike any of the earlier systems, a core tenet of EV is to use the current project data to predict a probable cost outcome – the effect of performance efficiencies to date is transposed onto future work. Many and varied assessments of this approach have consistently demonstrated EV is the most reliable of the options for attempting to predict the likely final cost of a project.
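As a rough illustration of how performance to date is transposed onto future work, the sketch below uses the most common EV forecasting formulae: CPI = EV / AC and EAC = BAC / CPI. The function name `cost_forecast` and the figures are made up for the example, and other EAC formulae exist; this is just the simplest one.

```python
def cost_forecast(bac, ev, ac):
    """Forecast the final cost from performance to date.

    bac: budget at completion, ev: earned value to date,
    ac: actual cost to date (all in the same currency units).
    """
    if ac <= 0 or ev <= 0:
        raise ValueError("some work must have been done and paid for")
    cpi = ev / ac    # cost efficiency achieved so far
    eac = bac / cpi  # assume the same efficiency on the remaining work
    return cpi, eac

# Hypothetical project: 1,000 budget, 400 earned for 500 spent.
cpi, eac = cost_forecast(bac=1000, ev=400, ac=500)
```

Here the CPI is 0.8 and the forecast final cost is about 1,250; no re-estimation of the remaining work was needed to get there.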

Unfortunately, EV in its original format was unable to translate its predictions of the final cost outcome (EAC) into time predictions. On a plotted ‘S-Curve’ it was relatively easy to measure the time difference between when a certain value was planned to be earned and when it was actually earned (SV time, or SVt), but the nature of an ‘S-Curve’ meant the current SVt had no relationship to the final time variance. A similar but different issue made using SPI equally unreliable. The established doctrine was to ‘look to the schedule’ to determine time outcomes. But the schedules were either at ‘Phase 1’ or ‘Phase 2’ capability – not predictive.
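One widely cited weakness of the traditional SPI (EV / PV), which may or may not be the exact issue meant above, is that it always drifts back to 1.0 by the time the work is complete, however late the project finishes. The sketch below shows this with invented numbers; the two curves and the function name `spi_series` are assumptions for illustration only.

```python
# Hypothetical cumulative planned value at the end of each period
# for a 4-period plan (BAC = 100).
pv = [25, 50, 75, 100]
# Hypothetical cumulative earned value for a project that took 6 periods.
ev = [15, 30, 50, 70, 90, 100]

def spi_series(pv, ev):
    """Return traditional SPI (EV / PV) at the end of each actual period."""
    bac = pv[-1]
    series = []
    for period, earned in enumerate(ev):
        # Once the planned finish has passed, PV stays at BAC.
        planned = pv[period] if period < len(pv) else bac
        series.append(earned / planned)
    return series

spi = spi_series(pv, ev)
```

The SPI starts at 0.6, then creeps up to finish at exactly 1.0 in period 6, even though the project ran two periods late.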

A number of options were tried through the 1960s, 70s and 80s to develop a process that could accurately predict schedule completion based on progress to date; ‘Count the Squares’ and ‘Earned Time’, in various guises, are two examples. Whilst these systems could provide reasonable information on where the project was at ‘time now’, and overcame some of the limitations in CPM by indicating issues sooner than standard CPM (e.g., float burn hiding a lack of productivity), none had a true predictive capability.

The development of Earned Schedule resolved this problem. Earned Schedule (ES) is a derivative of Earned Value; it uses EV data and modified EV formulae to create a set of ‘time’ information that mirrors EV’s ‘cost’ information and generates a predicted time outcome for the project. Since its release in 2003, studies have consistently shown ES to be as accurate in predicting schedule outcomes as EV is in predicting cost outcomes. In many respects this is hardly surprising, as the underlying data is the same for EV and ES and the ES formulae are adaptations of the proven EV formulae (see more on Earned Schedule).
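For readers who want to see the mechanics, here is a minimal sketch of the usual ES calculation: find the point on the planned value curve where PV equals the current EV (interpolating within a period), then form SPI(t) = ES / AT and a forecast duration of planned duration / SPI(t). The function names and the sample PV curve are invented for the example, and the sketch assumes equal-length periods and a strictly increasing PV curve.

```python
def earned_schedule(pv, ev_now):
    """Return ES in periods: the time at which ev_now was planned to be earned.

    pv: cumulative planned value at the end of periods 1..n.
    """
    curve = [0.0] + list(pv)  # PV is zero at time 0
    if ev_now >= curve[-1]:
        return float(len(pv))  # all planned value has been earned
    # Last whole period whose planned value has been fully earned.
    c = max(i for i, planned in enumerate(curve) if planned <= ev_now)
    # Linear interpolation inside the following period.
    return c + (ev_now - curve[c]) / (curve[c + 1] - curve[c])

def time_forecast(pv, ev_now, actual_time):
    """Return (ES, SPI(t), forecast duration) from progress to date."""
    es = earned_schedule(pv, ev_now)
    if es <= 0 or actual_time <= 0:
        raise ValueError("some value must have been earned in some time")
    spi_t = es / actual_time            # time efficiency achieved so far
    return es, spi_t, len(pv) / spi_t   # planned duration / SPI(t)

# Hypothetical 4-period plan; 30 units earned after 2 periods.
es, spi_t, forecast = time_forecast([25, 50, 75, 100], ev_now=30, actual_time=2)
```

In this example ES is 1.2 periods, SPI(t) is 0.6, and the forecast duration is a little under 6.7 periods against a plan of 4. Unlike the traditional SPI, SPI(t) does not return to 1.0 when a late project completes.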

Phase 4 – (the future) incorporating uncertainty

The future of the predictive aspects of project controls needs to focus on the underlying uncertainty of all future estimates (including EV and ES). Monte Carlo and similar techniques need to become a standard addition to the EV and ES processes so the probability of achieving the forecast date can be added into the information used for project decision making. Techniques such as ‘Schedule Density’ move project controls into the proactive management of uncertainty, but these too are rarely used.
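As a very simplified sketch of what ‘adding probability’ could look like, the code below runs a Monte Carlo simulation over the remaining work and reports the proportion of trials that finish within a target. Everything here is an assumption for illustration: the three remaining activities, their (optimistic, most likely, pessimistic) durations, the use of a triangular distribution, and the simplification that the activities run one after another in a single chain.

```python
import random

def probability_of_finishing(remaining, target, trials=10_000, seed=1):
    """Estimate the chance the remaining work takes no longer than target.

    remaining: list of (optimistic, most_likely, pessimistic) durations
    for activities assumed to run in series.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    hits = 0
    for _ in range(trials):
        total = sum(rng.triangular(low, high, mode)
                    for low, mode, high in remaining)
        if total <= target:
            hits += 1
    return hits / trials

# Hypothetical remaining work, durations in days.
remaining = [(3, 4, 8), (5, 6, 10), (2, 3, 6)]
p_forecast = probability_of_finishing(remaining, target=15)
```

A real analysis would sample across the full logic network and could also vary the performance factors behind the EV and ES forecasts, but even this simple version turns a single forecast date into a statement about how likely that date is.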

Summary:

From the mid 1800s (and probably much earlier) projects and businesses were being managed against ‘plans’. The plans could be used to identify problems that required management action, but they did not predict the consequential outcome of the progress being achieved. Assessing a likely outcome required a re-estimation of the remaining work, which was certainly done for the cost outcome on projects such as the construction of the Crystal Palace.

The next stage of development was the use of preceding logic, prototyped by Karol Adamiecki’s Harmonygraph, and made effective by the development of CPM and PERT as dynamic computer algorithms in the late 1950s. However, the default assumption in these ‘tools’ was that all future work would proceed as planned. Re-scheduling was needed to change future activities based on learned experience.

The ability to apply a predictive assessment to determine cost outcomes was introduced through the Earned Value methodology, developed in the early 1960s and standardised in 1967. However, it was not until 2003 that the limitations in ‘traditional EV’ related to time were finally resolved with the publication of ‘Earned Schedule’.

In the seminal paper defining ES, “Schedule is Different”, the concept of ES was defined as an extension of the graphical technique of schedule conversion (that had long been part of the EVM methodology). ES extended the simple ‘reactive statement’ of the difference between ‘time now’ and the date when PV = EV, by using ‘time’ based formulae, derived from the EV formulae, to predict the expected time outcome for the project.

The Challenge

The question every project controller and project manager needs to take into the New Year is: why are more than 90% of projects run using 18th century reactive bar charting, and the vast majority of the remainder run using 60-year-old CPM-based approaches, none of which offer any form of predictive assessment? Don’t they want to know when the project is likely to finish?

It’s certainly important to understand where reactive management is needed to ‘fix problems’, but it is also important to understand the likely project outcome and its consequences so more strategic considerations can be brought into play.

Prediction is difficult (especially about the future) but it is the only way to understand what the likely outcome will be based on current performance, and therefore support value based decision making focused on changing the outcome when necessary.

I have not included dozens of references in this post; all of the papers are available at http://www.mosaicprojects.com.au/PM-History.html